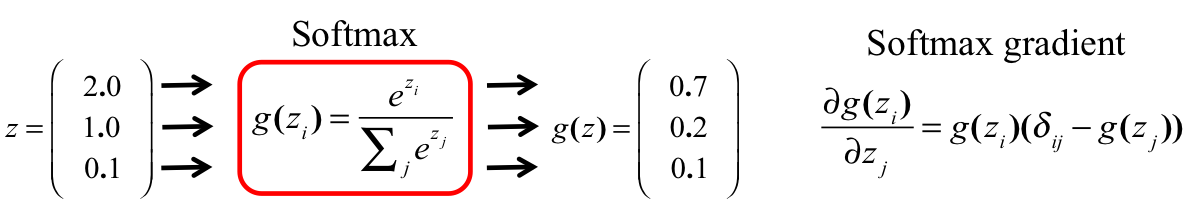

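```matlab
function [g, dg] = activation(z, type)
% ACTIVATION  Softmax activation function.
% Input:
%   z    : input to the activation function (n1-by-n2, one sample per column)
%   type : activation type (unused here; this version always applies softmax)
% Output:
%   g    : value of the activation function (n1-by-n2)
%   dg   : gradient (Jacobian) of g with respect to z (n1-by-n1-by-n2)
[n1, n2] = size(z);
% Subtract the column maximum before exp() to avoid overflow; softmax is
% invariant to this shift, so g is unchanged mathematically.
ez = exp(z - repmat(max(z, [], 1), [n1, 1]));
ezsum = sum(ez, 1);
g = ez ./ repmat(ezsum, [n1, 1]);
dg = zeros(n1, n1, n2);
for i = 1:n2
    % Softmax Jacobian for sample i: diag(g) - g*g'
    dg(:,:,i) = diag(g(:,i)) - g(:,i) * g(:,i)';
end
end
```

Backpropagation in `gradientnn.m`:

```matlab
[~, dg] = activation(zz{iter});
%%%----- in = dg[i] .* in (in book) ------%%%
% The book's element-wise product assumes an element-wise activation.
% Softmax couples all outputs, so its full Jacobian is applied instead.
if iter == layer_num - 1   % output layer uses softmax
    for i = 1:M
        in(:,i) = dg(:,:,i)' * in(:,i);
    end
end
```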
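As a sanity check, the Jacobian built in `activation` can be compared with a central finite-difference estimate. Its entries are $\partial g_j/\partial z_k = g_j(\delta_{jk} - g_k)$, so the product used in the backpropagation loop also has the closed form $J^\top v = g \odot \big(v - (g^\top v)\mathbf{1}\big)$, which suggests a loop-free alternative. The sketch below is illustrative only: `z` and `v` are arbitrary example values, `v` stands in for the upstream gradient held in `in`, and the anonymous `softmax` re-implements the same column-wise softmax so the block runs on its own.

```matlab
% Illustrative check (hypothetical inputs): analytic softmax Jacobian vs.
% central finite differences, and J'*v vs. its closed form g.*(v - g'*v).
z = [1.0 2.0; 0.5 -1.0; -2.0 3.0];   % 3 outputs, 2 samples (one per column)
v = [0.3 -1.0; 2.0 0.5; -0.7 1.0];   % stand-in for upstream gradient dL/dg
[n1, n2] = size(z);

% Column-wise softmax with max subtraction, same as activation()
softmax = @(x) bsxfun(@rdivide, exp(bsxfun(@minus, x, max(x, [], 1))), ...
                      sum(exp(bsxfun(@minus, x, max(x, [], 1))), 1));
g = softmax(z);

h = 1e-6;                 % finite-difference step
maxJacErr = 0;
maxJvpErr = 0;
loopAll = zeros(n1, n2);  % results of the per-sample loop, as in gradientnn.m
for i = 1:n2
    gi = g(:, i);
    J = diag(gi) - gi * gi';          % analytic Jacobian
    Jnum = zeros(n1, n1);
    for k = 1:n1                      % perturb one input coordinate at a time
        e = zeros(n1, 1);
        e(k) = h;
        Jnum(:, k) = (softmax(z(:, i) + e) - softmax(z(:, i) - e)) / (2 * h);
    end
    maxJacErr = max(maxJacErr, max(abs(J(:) - Jnum(:))));
    loopAll(:, i) = J' * v(:, i);     % Jacobian-transpose product
    closedVal = gi .* (v(:, i) - gi' * v(:, i));
    maxJvpErr = max(maxJvpErr, max(abs(loopAll(:, i) - closedVal)));
end

% All columns at once, without forming any Jacobian
inVec = g .* bsxfun(@minus, v, sum(g .* v, 1));

fprintf('max Jacobian error: %g\n', maxJacErr);
fprintf('max J''*v error: %g\n', maxJvpErr);
```

The vectorised line computes the same result as the `for i = 1:M` loop while avoiding the n1-by-n1-by-n2 array `dg`, which can matter when the number of output classes is large.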

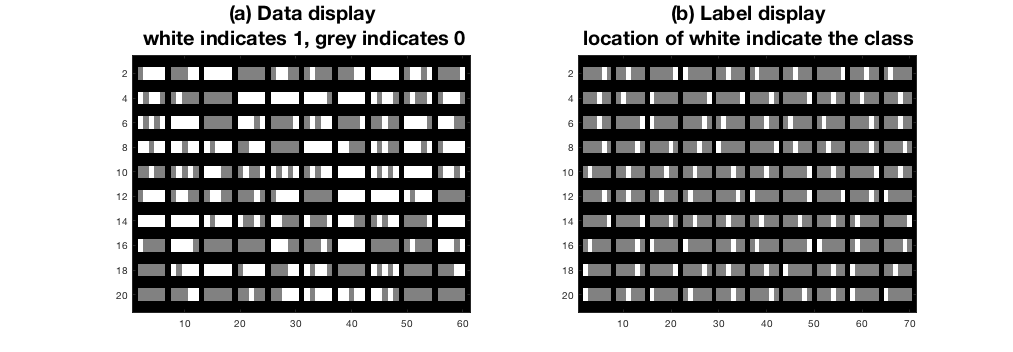

Figure 1: (a) Display of input vectors, (b) Corresponding labeling.